- 01 Feb, 2022

- Topic of the Month

1. Introduction

“The pace of progress in artificial intelligence is incredibly fast. Unless you have direct exposure to groups like Deepmind, you have no idea how fast - it is growing at a pace close to exponential. The risk of something seriously dangerous happening is in the five-year time frame. 10 years at most.” - Elon Musk

Since ancient times, humans have strived to expand, replace, and continually improve their abilities with the help of artificially created tools that nature has not provided them.

People have always pursued this goal with the technology available in their age. In the middle of the 20th century, the advent of the computer created the opportunity to partially replace humanity’s most valued trait, intelligence, with artificial devices.

Data is already one of the most essential economic assets, and it will become even more so in the coming years. Data management, data mining, and data protection together can be the cornerstones of development for smaller and larger companies alike, and even for entire economies.

Photo by Markus Winkler from Pexels

Artificial intelligence (AI) is the science and practice of designing software and systems capable of “smart” operation. One of its critical areas is machine learning (ML), which enables a system to learn from experience, i.e. to perform its tasks based on, among other things, the analysis of data.

In the broad sense, AI encompasses all algorithms and technologies that perform or replace tasks requiring human intelligence. In the strict sense, AI covers technologies that are capable of learning and improving on their own. AI is thus basically a set of one or more algorithms (sequences of operations). In everyday life, many people regard artificial intelligence as science fiction come true, even though AI is a significant branch of computer science that deals with intelligent behaviour, machine learning, and machine adaptation. It has become a discipline that tries to provide answers to real-life problems. It is no coincidence that such systems are used primarily in economics, medicine, and design.

AI without a goal has no value on its own: such systems are always tied to a specific area or problem for which an adequate amount of good-quality data is available. AI works well when it is trained on good data, and its accuracy and success usually depend on that data. Where AI works well, companies can save time and energy because the technology solves problems very quickly and efficiently, leaving people free to deal with even more complex problems.

2. Artificial intelligence, machine learning, deep learning

“Much of what we do with machine learning happens beneath the surface. Machine learning drives our algorithms for demand forecasting, product search ranking, product and deals recommendations, merchandising placements, fraud detection, translations, and much more. Though less visible, much of the impact of machine learning will be of this type - quietly but meaningfully improving core operations.” - Jeff Bezos

2.1. Artificial intelligence (AI)

The term artificial intelligence was coined by John McCarthy for the Dartmouth summer workshop of 1956. He was one of the greatest innovators in the field and is recognised as the father of artificial intelligence for his exceptional contributions to computing and AI. However, the term owes its spread to Marvin Minsky’s 1961 article “Steps Toward Artificial Intelligence.”

Although many definitions of artificial intelligence have emerged over time, they all describe computer systems that solve problems that would otherwise require human intelligence. Perhaps it is best to cite the definition given by John McCarthy himself:

“It is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable.” – John McCarthy

Photo by Pavel Danilyuk from Pexels

2.2. Machine learning (ML)

Machine learning (ML) is a branch of artificial intelligence that allows machines to learn, identify patterns, and make predictions based on the data they process. Machine learning requires an adequate data set for the learning process. ML does not necessarily need an extensive data set, though a larger amount of relevant data can improve the accuracy of the models.

Machine learning is based on algorithms. These algorithms are the driving force of the machine: they extract information from raw data sets and identify patterns in them. The resulting model becomes the basis for the computer’s later decisions. An important feature of machine learning is that the computer is not explicitly programmed to produce the end result; instead, the machine learns to recognise patterns and infer likely outcomes.
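To make the idea of learning from data, rather than being explicitly programmed, more concrete, here is a minimal sketch in Python. It assumes the simplest possible model, a straight line fitted with ordinary least squares; the function names `fit_line` and `predict` and the training data are hypothetical illustrations, not part of any specific library. Nowhere does the code state the rule y = 2x + 1; the program estimates it from the examples.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Learn the slope and intercept that minimise the squared error on the data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the slope.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: tuple[float, float], x: float) -> float:
    """Use the learned parameters to make a decision about a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training examples that happen to follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

model = fit_line(xs, ys)
print(model)               # (2.0, 1.0)
print(predict(model, 10))  # 21.0
```

Real machine learning models have far more parameters, but the principle is the same: the parameters are estimated from data, and the fitted model is then used for new decisions.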

2.3. Deep learning (DL)

Deep learning is a subfield of machine learning. The term covers algorithms that allow efficient and fast processing of an ever-increasing amount of data. Artificial intelligence can also be created by pre-programming responses to millions of possible events. A more exciting approach is to equip a machine with only essential skills so that, like an infant, it acquires its knowledge from the ground up. Such machines draw their own conclusions from their observations and experiences, so they no longer need to be programmed for a specific area; instead, they are taught to learn it.

Deep learning differs from traditional machine learning in that it processes incoming information in multiple layers. Each layer performs only its own task; in image recognition, for example, one layer looks only at the pixels themselves, another at, say, the edges, and so on. Because of this layered design, training a deep learning model is very complicated: it is only possible with a lot of data and is quite computationally intensive.
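The layered principle can be illustrated with a toy pipeline in Python. This is a hedged sketch only: the three layers below (`edge_layer`, `pooling_layer`, `decision_layer`) are hypothetical, and their behaviour is fixed by hand, whereas in a real deep network the weights of each layer are learned from large amounts of data. What it does show is how each layer performs one narrow task and passes its output on to the next.

```python
from typing import Callable

Image = list[list[float]]


def edge_layer(image: Image) -> Image:
    """Layer 1: respond to vertical edges by comparing horizontally adjacent pixels."""
    return [[abs(row[i + 1] - row[i]) for i in range(len(row) - 1)] for row in image]


def pooling_layer(feature_map: Image) -> list[float]:
    """Layer 2: summarise each row by its strongest edge response (max pooling)."""
    return [max(row) for row in feature_map]


def decision_layer(pooled: list[float], threshold: float = 0.5) -> bool:
    """Layer 3: report an edge if at least half of the rows contain a strong one."""
    strong_rows = sum(1 for value in pooled if value >= threshold)
    return strong_rows >= len(pooled) / 2


def forward(image: Image, layers: list[Callable]) -> object:
    """Pass the input through each layer in turn, as a deep network does."""
    output = image
    for layer in layers:
        output = layer(output)
    return output


# Tiny 3x4 greyscale "images": one dark on the left and bright on the right, one uniform.
image_with_edge = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
flat_image = [[0.5, 0.5, 0.5, 0.5] for _ in range(3)]

layers = [edge_layer, pooling_layer, decision_layer]
print(forward(image_with_edge, layers))  # True
print(forward(flat_image, layers))       # False
```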

Deep learning can draw on multiple data sources and process vast amounts of data, in many cases in real time, in a way loosely inspired by the functioning of the human brain. It is the driving force behind many applications and services that support automation by performing analytical and physical tasks without human intervention.

Artificial intelligence by Geralt in Pixabay

Deep learning is used successfully in voice control services, telephony applications, and smart devices, as well as to prevent credit card fraud. Everyday examples include digital assistants (Siri, Cortana, Google Now, Facebook M, Braina, Teneo, etc.) and self-driving cars.

The principle of the method was already known in the 1960s, but even twenty years later, computers could handle so little data that the approach did not become widespread. The breakthrough came only a few years ago: today, the systems of Facebook, Google and Microsoft store huge amounts of data, and server farms and supercomputers provide large processing capacity.

Deep learning is currently highly effective in image and speech recognition, and it is best suited to companies with substantial computing power and a wealth of data.

With the help of deep learning, a machine can already tell whether a photo shows, say, a dog, but finer distinctions, such as the breed, are much harder. Even today, finding visual content works reliably mainly when the image or video is tagged with its content. For example, if you search Google for images of the English Bulldog, you will get many results; the reverse, however, does not necessarily work: a machine will not necessarily figure out what kind of dog is in an image unless the image is tagged.

The British-American sci-fi film Ex Machina, released in 2015, deals with artificial intelligence and its possible consequences. In the film, a so-called inverted Turing test is used to determine whether a machine is really a conscious, thinking being or is merely simulating its feelings and reactions. The test succeeded: the robot, Ava, was so intelligent that she outwitted both her creator and her tester.

Ex Machina – Official Teaser Trailer

And yes, we must admit that machines are outperforming us in more and more games and logic tasks, largely thanks to deep learning. The point of the method is not that researchers equip their software with knowledge by hand; instead, they teach it to think and learn and then connect it to a more extensive database from which it can feed. The machine is given only basic skills, acquires its knowledge from the ground up, and becomes more intelligent.

2.4. The difference between machine learning and deep learning

Traditional machine learning typically works with structured data whose relevant features have already been prepared and systematised to some degree, often by humans. Deep learning, in contrast, can also process raw, unstructured data such as images, audio, and text, learning the relevant features itself. It uses multi-layered neural networks that penetrate the “deep” structure of the data, identifying and classifying it more and more effectively while teaching itself at a more profound level.

Even some of the greatest technological and scientific minds on Earth are far from optimistic about this incredible rate of development. Stephen Hawking, for example, warned that AI could be the end of the human race:

“The potential benefits are huge; everything that civilisation has to offer is a product of human intelligence; we cannot predict what we might achieve when this intelligence is magnified by the tools that AI may provide, but the eradication of war, disease, and poverty would be high on anyone’s list. Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks.” – Stephen Hawking

No one knows what the future holds, especially when development proceeds at such a pace. In an interview with the BBC, Hawking also explained that the real problem lies in the speed and uncontrollability of development: while humans are bound by slow biological evolution spanning centuries and millennia, machines, once they break away from us, could improve themselves to an astonishing degree.

“Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.” - Stephen Hawking

Image resource: “VistaCreate” by Vitalik Radko

During a question-and-answer session on Reddit, Bill Gates explained that he counts himself among those who are concerned about artificial intelligence:

“I am in the camp that is concerned about super intelligence. First, the machines will do a lot of jobs for us and not be super intelligent. That should be positive if we manage it well. A few decades after that though the intelligence is strong enough to be a concern. I agree with Elon Musk and some others on this and don’t understand why some people are not concerned.” – Bill Gates

Inventor, engineer, and entrepreneur Elon Musk stated in 2014 that developing artificial intelligence is like “summoning a demon”. Accordingly, in January 2015, he donated $10 million to 37 different research projects aimed at identifying as many dangers of artificial intelligence as possible, so that the creation of potentially destructive forces can be countered as effectively as possible.

The billionaire has taken on this task because he thinks the public knows little about artificial intelligence and how much danger it can pose to humanity.

“I don’t think most people understand how quickly machine intelligence is advancing. It’s much faster than almost anyone realises, even within Silicon Valley.” - Elon Musk

If so many great minds already think that the rapid development of artificial intelligence could become a significant problem, we would do well to take them seriously. We still do not know in what form our machines might start to feel and think, entirely independently of us. According to Musk, it could be a disaster above all if robots focus on their own development and simply on themselves; from that moment on, AI might treat humanity like spam that is easier to delete quickly than to put up with for a long time.

Elon Musk: Artificial Intelligence Could Wipe Out Humanity

Robots are also increasingly making their way into military technology. Warfare began to change enormously in 2001, when the United States started using drones in Afghanistan, and later in large numbers in the Iraq war.

If the direction and speed of development do not change, AI may develop a consciousness similar to ours and, in addition to logical thinking, become capable of making emotional decisions, which could be the perfect recipe for the end of the world.

3. Why open up to artificial intelligence?

“Everything that moves will be autonomous someday, whether partially or fully. Breakthroughs in AI have made all kinds of robots possible, and we are working with companies around the world to build these amazing machines.” - Jensen Huang, Nvidia CEO

AI can make life easier for businesses in many areas in the future. Let us look at the reasons and circumstances that make it not only worthwhile but essential to deal with AI:

1. AI is already here, and it is here to stay

Machine intelligence has been around for decades.

2. AI speeds up various processes

Increasingly capable algorithms can also be a great help in organising processes. Today, data is the starting point for almost everything. However, collecting data is labour-intensive and time-consuming, and the more information is available, the more difficult it is to analyse. AI, on the other hand, can process vast amounts of data and make decisions much faster and more efficiently than humans.

3. AI can improve quality

In today’s fast-paced world, customers are more demanding than ever, and companies must stand out from the crowd in fierce market competition. To be truly effective, they need to provide better quality and a higher level of service.

Once business problems are expressed as data, AI-based analysis and problem-solving capabilities can be applied to them effectively.

Photo by Jason Leung on Unsplash

4. AI can increase productivity

Businesses are increasingly looking for ways to increase labour productivity and reduce costs. The examples mentioned above also support this and demonstrate the need for AI in business processes. Solutions based on machine intelligence can help ease workforce constraints in some areas or reallocate work, allowing workers to complete more tasks in less time or to put their expertise to use in other areas.

5. AI is capable of continuous learning and development

One of the great benefits of AI is its ability to learn independently. This means that it can continuously analyse its own operations and decisions and, through the learning process, make increasingly mature and better decisions.
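Continuous learning can be sketched with an online learner that updates itself after every new observation. The example below is a hypothetical illustration in Python using a one-feature perceptron, a classic but deliberately simple algorithm chosen here for brevity; production systems use far more elaborate models and safeguards. The class name `OnlinePerceptron` and the data stream are invented for this sketch.

```python
class OnlinePerceptron:
    """Learns one example at a time; its weights change only when it makes a mistake."""

    def __init__(self, learning_rate: float = 1.0) -> None:
        self.w = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: float) -> int:
        return 1 if self.w * x + self.b > 0 else 0

    def learn(self, x: float, label: int) -> bool:
        """Update on a single observation; return True if the prediction was wrong."""
        error = label - self.predict(x)
        if error != 0:
            # Nudge the decision boundary towards the correct answer.
            self.w += self.learning_rate * error * x
            self.b += self.learning_rate * error
        return error != 0


# Hypothetical stream of observations: label 1 means "x is large".
stream = [(1, 0), (5, 1), (2, 0), (4, 1)]
model = OnlinePerceptron()
for pass_number in range(1, 5):
    mistakes = sum(model.learn(x, y) for x, y in stream)
    print(f"pass {pass_number}: {mistakes} mistakes")
```

On this stream, the model makes 2, 3, 2 and finally 0 mistakes in passes 1 to 4, ending with the decision rule "x > 3". The improvement comes purely from the model adjusting itself to its own errors.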

The main types of artificial intelligence are:

- Software-based: virtual assistants, image analysis software, search engines, speech and face recognition systems

- Physical: robots, self-driving cars, drones, the Internet of Things (IoT)

4. Artificial intelligence in our daily lives

“We are entering a new world. The technologies of machine learning, speech recognition, and natural language understanding are reaching a nexus of capability. The end result is that we’ll soon have artificially intelligent assistants to help us in every aspect of our lives.” - Amy Stapleton

Here are some examples of artificial intelligence at work that we might not even think of.

Online shopping and ads

Companies widely use artificial intelligence to show us more personalised ads online, based on our past searches and purchases or other online activities. Artificial intelligence is crucial in online commerce, for example, for optimising products or planning inventory and logistics.
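As a rough illustration of how past purchases can drive personalised suggestions, here is a minimal Python sketch based on simple co-purchase counting. The function `recommend`, its scoring rule, and the sample baskets are hypothetical; real recommender systems in online commerce use far richer signals and models.

```python
from collections import Counter


def recommend(purchase_histories: list[list[str]], user_items: list[str], top_n: int = 2) -> list[str]:
    """Suggest items that other customers bought together with the user's items."""
    owned = set(user_items)
    scores = Counter()
    for basket in purchase_histories:
        items = set(basket)
        overlap = len(items & owned)  # how similar this basket is to the user's purchases
        if overlap == 0:
            continue
        for item in items - owned:
            scores[item] += overlap
    # Highest score first; ties are broken alphabetically so the output is deterministic.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, _ in ranked[:top_n]]


# Hypothetical purchase histories of other customers.
histories = [
    ["tent", "sleeping bag", "torch"],
    ["tent", "sleeping bag"],
    ["tent", "camping stove"],
    ["novel", "bookmark"],
]
print(recommend(histories, ["tent"]))  # ['sleeping bag', 'camping stove']
```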

Internet search

Search engines analyse and learn from the vast amounts of data users generate in order to show results relevant to our searches.

Digital personal assistants

Smartphones use artificial intelligence to offer the most relevant and personalised services possible. Virtual assistants answer our questions and help us organise our daily routine.

Artificial intelligence by Geralt in Pixabay

Examples of AI-based products and services:

- AI-powered robots: Sophia (Hanson Robotics), Spot, Atlas (Boston Dynamics)

- Voice-controlled AI assistants: Amazon Alexa, Apple Siri, Google Home, Emotech Olly

- Self-driving cars: Avenue Project – Sales-Lentz, Tesla, Motional, Cruise, Waymo, Luminar

- Navigation: Google Maps, Waze, Apple Maps, HereWeGo, TomTom

Machine translation

Whether based on written or spoken text, translation software relies on artificial intelligence to provide and improve translations. This also applies to features such as auto-captioning.

Smart cars

Although fully self-driving vehicles are not yet on the market, cars already use AI-based safety features. Navigation systems also mostly rely on artificial intelligence.

Cybersecurity

AI systems can help protect against cyber-attacks and other cyber threats based on continuous data processing, pattern recognition, and attack tracing.

Artificial intelligence against Covid-19

Artificial intelligence has also been used during the coronavirus pandemic, for example for thermal imaging at airports. In medicine, it can help detect infection on computed tomography (CT) lung scans. It is also helpful for collecting data to monitor the spread of the disease.

Fight against misinformation

Some artificial intelligence applications can detect fake content and misinformation by analysing social media data, searching for sensationalist or scary words, and determining which online sources can be considered authentic.
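The word-and-source approach described above can be sketched as a simple scoring heuristic in Python. Everything here is a hypothetical assumption for illustration: the word list, the source ratings, the `.example` domain names, and the 50/50 weighting. Real systems typically learn such signals from labelled data instead of hard-coding them.

```python
import re

# Hypothetical inputs, for illustration only.
SENSATIONAL_WORDS = {"shocking", "miracle", "secret", "terrifying", "exposed"}
SOURCE_TRUST = {"trusted-news.example": 0.9, "clickbait.example": 0.2}


def suspicion_score(text: str, source: str) -> float:
    """Return a score between 0 and 1; higher means more likely to be misinformation."""
    words = re.findall(r"[a-z']+", text.lower())
    sensational_share = sum(word in SENSATIONAL_WORDS for word in words) / max(len(words), 1)
    wording_signal = min(1.0, 5 * sensational_share)  # saturates once 20% of words are sensational
    trust = SOURCE_TRUST.get(source, 0.5)  # unknown sources get a neutral rating
    return 0.5 * wording_signal + 0.5 * (1 - trust)


print(round(suspicion_score("Shocking secret miracle cure exposed", "clickbait.example"), 2))
print(round(suspicion_score("Council approves budget for new library", "trusted-news.example"), 2))
```

With these assumed inputs, the clickbait-style headline scores about 0.9 and the neutral one about 0.05; a real system would then decide, possibly with human review, what threshold warrants action.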

Photo by Yuyeung Lau on Unsplash

5. Artificial intelligence in other areas of life

“The internet will disappear. There will be so many IP addresses, so many devices, sensors, things that you are wearing, things that you are interacting with, that you won’t even sense it. It will be part of your presence all the time. Imagine you walk into a room, and the room is dynamic. And with your permission, you are interacting with the things going on in the room.” - Eric Schmidt

AI transforms virtually every aspect of life and the economy.

Health

Researchers are already studying how AI can be used to analyse large amounts of health data to find patterns, leading to new discoveries in medicine and better diagnostic options.

Production

Artificial intelligence can help manufacturers become more efficient, optimise logistics with the help of robots, and support factory maintenance by anticipating potential failures.

Administration and services

By analysing data, AI can issue warnings about natural disasters and help us prepare effectively and mitigate the consequences.

Machine learning by M Hassan in Pixabay

6. The potential of artificial intelligence

“I’m more frightened than interested by artificial intelligence – in fact, perhaps fright and interest are not far away from one another. Things can become real in your mind, you can be tricked, and you believe things you wouldn’t ordinarily. A world run by automatons doesn’t seem completely unrealistic anymore. It’s a bit chilling.” - Gemma Whelan

6.1. Benefits for societies

Artificial intelligence can help improve healthcare, make cars safer, and create customised, cheaper, and more durable products and services. AI can also facilitate education and training, especially now that distance learning is at the forefront. With the help of robots, artificial intelligence can also make jobs safer, and it can create new ones as industries using AI continue to evolve and grow.

6.2. Opportunities for businesses

Artificial intelligence can enable businesses to develop new products and services, especially in sectors where European companies already have a strong position: green economy, mechanical engineering, healthcare, or tourism. It can improve machine maintenance, increase production performance and quality, improve the quality of customer service and save energy for businesses.

6.3. Artificial intelligence in public services

Artificial intelligence used in public services can cut costs and offer new opportunities in public transport, education, energy, and waste management. It can also improve the sustainability of products. With data-driven security systems, AI can help prevent misinformation and cyber-attacks and ensure access to quality information.

6.4. Safety

Artificial intelligence is expected to be used increasingly in both crime prevention and the justice system, as large data sets can be processed faster, and crimes, even terrorist attacks, can be better predicted and prevented. Online platforms already use it to detect illegal online behaviour. Artificial intelligence can also be used for defence and attack strategies against hacking and phishing.

Machine learning by GDJ in Pixabay

6.5. AI in Education

It is becoming increasingly crucial for young people to acquire the basics of digital literacy as early as possible in their education. One of these skills is problem-solving, which will be of paramount importance to them in the job market, whether they are carrying out tasks or developing a business.

One of the most striking examples of artificial intelligence in education is Jill Watson. For five months, students at the Georgia Institute of Technology did not realise that one of their teaching assistants was an artificial intelligence. Jill Watson was built on IBM Watson software and deployed at the university by Professor Ashok Goel for testing purposes.

Jill Watson helped graduate students with their course-related matters and responded to their emails and forum posts. According to the students, the digital assistant’s messages seemed ordinary and accurate, and they often used slang.

“The students had no idea until they were told - and many were shocked.” – Daily Mail

Most students noticed nothing at all; only a few realised that their messages were always answered within a few minutes. One student even speculated that Jill was simply a friendly teaching assistant working on a doctorate.

Photo by Andrea De Santis on Unsplash

6.6. AI and healthcare

Artificial intelligence has also played a significant role in healthcare in recent years. Here, one of the driving forces of robotics is the creation of devices that eliminate the possibility of human error or provide access to parts of the body that human intervention cannot reach.

In healthcare, time efficiency (and thus relief for doctors and nurses) and the precision of diagnostic procedures are the main goals that call for exploring the possibilities of artificial intelligence as fully as possible.

7. Risks of using artificial intelligence

“Artificial intelligence will reach human levels by around 2029. Follow that out further to, say, 2045, we will have multiplied the intelligence, the human biological machine intelligence of our civilisation a billion-fold.” - Ray Kurzweil

Every coin has two sides: in addition to the many benefits listed above, let us look at the dangers of using artificial intelligence. Perhaps the greatest danger lies in the fact that humanity is not really aware of the risks AI can pose to us.

The incredible pace of development in artificial intelligence is increasingly worrying, thought-provoking, and sometimes downright startling. Self-driving cars are on the verge of everyday use, space tourism is a reality, private space travel is gaining ground, and many of us already play games that use virtual reality.

Google’s and Tesla’s self-driving cars are already being tested in live traffic, government agencies have long since lost their monopoly on the space market, and a boom in space tourism is imminent.

Photo by Possessed Photography on Unsplash

7.1. Who is to blame for the damage caused by AI?

One of the biggest challenges with technology is determining who is responsible for the damage caused by a device or service powered by artificial intelligence. For example, in an accident involving a self-driving car, should the damage be covered by the owner, car manufacturer or programmer?

If manufacturers are not held accountable, people’s trust in the technology may decline; on the other hand, overly strict regulation can stifle innovation.

7.2. The impact of AI on jobs

Perhaps one of the most pressing questions about artificial intelligence is how its spread will affect the labour market: will the proliferation of AI make human workers obsolete?

- The global AI market is predicted to reach a value of $190.61 billion in 2025.

- The forecast annual growth rate of AI between 2020 and 2027 is 33.2%.

- China is expected to become the world leader in AI technology by 2030.

- The lack of trained and experienced staff is expected to restrict the AI market’s growth.

- In 2022, companies are expected to have an average of 35 AI projects in place.

- The number of digital assistants in use worldwide is expected to double, reaching 8.4 billion by 2024.

- 52% of people are confident that, thanks to robust AI technologies, sharing personal information online does not pose a cybersecurity threat.

- By 2025, demand is expected to grow for 97 million people in jobs such as AI and machine learning specialists, process automation specialists, big data specialists, and more.

We have seen many times throughout history that tasks previously done by humans have been automated with the advancement of technology. Following this line of reasoning, it is reasonable to assume that artificial intelligence will lead to the loss of many jobs.

However, a recent report by an MIT working group, “Artificial Intelligence And The Future of Work”, paints a more optimistic picture. According to the report, artificial intelligence will drive massive innovation, which will require many highly skilled workers in entirely new jobs. AI is also expected to create better jobs, so education and training will play a crucial role in providing a skilled workforce. As the executive summary explains:

“For the foreseeable future, therefore, the most promising uses of AI will not involve computers replacing people, but rather, people and computers working together…” – MIT Work of the Future

The modern industrial economy has become generally dependent on computers and is increasingly dependent on AI programs. These programs might seem to have put many workers out of work, but in fact, automation using artificial intelligence has so far created more jobs than it has eliminated. Artificial intelligence accelerates technological innovation, but it also forces workers to keep up with ever-accelerating processes.

7.3. Risks to fundamental rights

If not handled properly, artificial intelligence can lead to wrong decisions, for example when data on ethnicity, gender, and age influences decisions about renting out a property or even dismissing an employee. It can also affect our right to privacy and data protection, for example when it is used in facial recognition devices or for online tracking and profiling.

The EU has already prepared its first set of rules to address the opportunities and threats of artificial intelligence, which focuses on building trust in artificial intelligence. The EU aims to make Europe a global hub for reliable artificial intelligence.

Artificial intelligence by Geralt in Pixabay

7.4. Deepfakes as the most severe crime threat

According to a recent UCL report, experts rank fake audio and video content among the most worrying uses of artificial intelligence, given its potential for crime or terrorism. Fake content is difficult to detect, stop, or neutralise. At the same time, it can be used very easily and effectively to influence large masses or to artificially provoke mass hysteria, which spreads like wildfire, especially in crises such as a pandemic.

Besides fake content, the AI-enabled crimes of greatest concern are the use of autonomous vehicles as weapons, more personalised phishing messages, the disruption of AI-driven systems, the large-scale collection of online information for extortion, and AI-written fake news.

7.5. AI may raise questions of legal accountability

The use of AI systems also raises questions of legal accountability. This question may be most acute for AI used in medicine. The fundamental question is this: if a doctor relies on the judgement of an AI-driven system or of a team of medical experts when making a diagnosis or a life-saving decision, who can be held responsible for an error?

7.6. Is AI the end of the human race?

Can the success of artificial intelligence mean the end of the human race? Countless sci-fi writers have long been preoccupied with the question of whether robots or robot-human cyborgs could run amok.

Virtually any technology can cause harm in the wrong hands. In the case of artificial intelligence and robotics, however, those wrong hands may even belong to the technology itself. The main danger many see is that robots will become indispensable to humans and, thanks to their ability to learn on their own, reach a level of intelligence that exceeds natural human intelligence.

Sophia awakens

8. Artificial intelligence and cybersecurity

“Achieving cybersecurity is a journey and not a destination. The Coalition’s blueprint provides the rationale for course corrections in face of new threats brought by emerging technologies such as cloud computing, robotics, AI and blockchain where “security by design” is the foundational practice to follow.” - Dr Marco Mirko Morana

8.1. AI as a threat to cybersecurity

We can read in countless places about how high the cyber threat is and how common cyber attacks are. Today, it is clear to everyone that we are facing a severe problem. The situation is getting worse: the frequency and sophistication of cyber attacks and the damage they cause are increasing.

However, the question arises: can we use the potential of AI to mitigate and prevent cyberattacks?

There is massive potential in using artificial intelligence, and its areas of application are almost inexhaustible. In this chapter, we look for the answer to how the potential of AI can be harnessed for cyber defence.

More and more organisations see artificial intelligence and machine learning as technologies that take some of the burden off security teams by automating routine tasks and reviewing vast amounts of security-related data. According to IT and security executives, detecting and identifying potential threats is an ideal initial use case for AI and automation.

One of the biggest challenges for security teams is the exponential growth in the amount of data and the task of monitoring and managing it. As we monitor more and more systems, checking who logged in and when, or what they downloaded and when, the problem is no longer whether we know that something has happened, but whether we notice when something unusual happens. And an “unusual” event can be genuinely unusual user or system behaviour, or simply a false alarm.

Constant monitoring and data analysis require many highly qualified professionals, but there is a shortage of such workers in cybersecurity.

Data mining by GDJ in Pixabay

The effectiveness of AI-based solutions can also be measured by how quickly they detect an intrusion. Unlike analysts at security operations centres, who can tire of reading endless log files, artificial intelligence does not sleep, go on vacation, or need coffee breaks.

Artificial intelligence can help system and network administrators in more and more organisations. AI-based solutions can also be used to speed up and improve the behavioural analysis of user log files, which helps identify potential security events and increase the efficiency of multi-factor authentication.

Behavioural analysis performed by AI can help prevent malware attacks, because AI-based antivirus solutions focus on events and their patterns rather than on virus signatures, so they can quickly identify malicious code and abnormal behaviour.

While artificial intelligence and automation play a critical role in reducing the workload of IT security teams, organisations will continue to need well-trained professionals to perform high-level analysis and maintain systems. Automation and machine learning increase efficiency, but there will still be a great need for human expertise, logical thinking, and creativity to maximise the benefits of the technology and address threats.

There are legitimate concerns about artificial intelligence and automation, because cybercriminals can also use them to launch larger and more effective attacks than ever before. What is certain is that hackers are already experimenting with these technologies. Using and developing AI is a double-edged sword: AI learns exceptionally quickly and can break into systems faster and more cleverly than traditional penetration or vulnerability testing tools.

Cyber-attackers use every means at their disposal to get around organisations’ defences, including artificial intelligence, which can be deployed to analyse cyber defence mechanisms and employee behaviour patterns. Attackers can also deceive AI-based defence systems by flooding their training models with false data. Artificial intelligence requires training, vast amounts of data, and simulated attacks, and it does not protect against real threats until it can identify them accurately.
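The effect of flooding a model with false data (often called data poisoning) can be illustrated with a deliberately simple detector. The sketch below assumes a hypothetical detector that learns an alert threshold of mean + 3 standard deviations from traffic it is told is normal; the traffic figures and function names are made up for illustration. A handful of injected, falsely “normal” samples raises the threshold so far that a later data exfiltration attempt no longer triggers an alert.

```python
from statistics import mean, stdev


def learn_threshold(normal_samples, k=3.0):
    """Learn an alert threshold as mean + k standard deviations of 'normal' traffic."""
    return mean(normal_samples) + k * stdev(normal_samples)


def is_alert(value, threshold):
    """Raise an alert when the observed value exceeds the learned threshold."""
    return value > threshold


# Hypothetical hourly outbound traffic (MB) observed during normal operation.
clean_training = [10, 12, 11, 9, 13, 10, 12, 11]

# The attacker slips a few large samples, falsely labelled as normal, into the training data.
poisoned_training = clean_training + [80, 85, 90]

exfiltration_attempt = 60  # MB sent out in one hour

clean_threshold = learn_threshold(clean_training)
poisoned_threshold = learn_threshold(poisoned_training)

print(f"clean threshold:    {clean_threshold:.1f} -> alert: {is_alert(exfiltration_attempt, clean_threshold)}")
print(f"poisoned threshold: {poisoned_threshold:.1f} -> alert: {is_alert(exfiltration_attempt, poisoned_threshold)}")
```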

Evolution by Geralt in Pixabay

Artificial intelligence can also help with data protection by preventing unwanted end-user behaviour, and thus data leakage and unauthorised access.

Integrating AI into cybersecurity systems is not an easy task. Business leaders need to understand the challenges of deployment and push for solutions that increase the effectiveness of security programs while remaining ethical and protecting privacy. Organisations must be aware that using AI technologies comes with responsibilities. Tracking research findings, modelling potential threats, and adhering to general guidelines will be essential if we are to use artificial intelligence in cybersecurity programs in the future.

With the help of artificial intelligence, hackers can send emails to a specific target group, such as a bank’s customers, to obtain their data, making the well-known technique of phishing more targeted, more realistic, and more credible. Beyond phishing, artificial intelligence can also be used for blackmail and to falsify evidence.

Artificial intelligence and machine learning are becoming increasingly important for information security today. These technologies can quickly analyse millions of data sets and track a wide range of cyber threats.

Artificial intelligence can also make cyber attacks much more effective: for example, machine learning can quickly map a system’s vulnerabilities.

8.2. AI as a helper of cybersecurity

Detecting new threats

Artificial intelligence can be used to detect cyber threats and potentially malicious activities. Traditional software systems simply cannot keep up with the volume of new malware created every week, so AI can help significantly here.

Sophisticated algorithms can be used to train AI systems to detect malware, run pattern recognition, and spot even the slightest signs of malware or ransomware behaviour before it enters the system. Artificial intelligence also enables superior predictive intelligence, as it can independently collect data from articles, news, and studies on cyber threats. In this way, AI can keep up with changing threats without human intervention and respond to them very quickly.

With artificial intelligence, cybersecurity professionals can reinforce cybersecurity best practices and minimise the attack surface instead of constantly monitoring for malicious activity.

Photo by Alex Knight from Pexels

Breach risk prediction

Artificial intelligence systems can easily map the inventory of IT devices on a network and provide accurate records of all devices, users, and applications with different access levels to different systems.

Thanks to the continuous collection and analysis of data on cyber threats, and knowledge of the tools on your network, AI-based systems can predict what on your network is most likely to be compromised, and how. With this information, you can plan and allocate resources to the most vulnerable areas.
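Prioritisation of this kind is often expressed as risk = likelihood × impact. The sketch below ranks a hypothetical asset inventory that way. In an AI-based system the likelihood estimates would come from a model trained on threat data; here they are simply made-up numbers, and the `Asset` class and `prioritise` function are illustrative names rather than any product’s API.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    likelihood: float  # hypothetical estimated probability of compromise (0..1)
    impact: int        # hypothetical business impact if compromised (1..10)


def prioritise(assets):
    """Rank assets by expected risk = likelihood x impact, highest first."""
    return sorted(assets, key=lambda a: a.likelihood * a.impact, reverse=True)


inventory = [
    Asset("public web server", likelihood=0.30, impact=7),
    Asset("HR database", likelihood=0.10, impact=10),
    Asset("unpatched legacy VPN gateway", likelihood=0.60, impact=8),
    Asset("office printer", likelihood=0.40, impact=2),
]

for asset in prioritise(inventory):
    print(f"{asset.name:32} risk={asset.likelihood * asset.impact:.2f}")
```

Note that the HR database, despite having the highest impact, ranks below the web server because its estimated likelihood of compromise is much lower.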

Better endpoint protection

The number of devices used for remote work is growing rapidly, and AI plays a pivotal role in securing these endpoints against cyberattacks.

One of the most significant advantages of AI over traditional antivirus programs is that it does not rely on a virus signature database, which must be updated whenever new threats are detected. Missing virus definitions, for example because the antivirus solution was not updated, are a real concern: if a new type of malicious attack occurs, signature-based protection may not be able to defend against it.

AI, on the other hand, pays attention to patterns and unusual behaviour and is constantly “improving” itself. If something unusual happens, artificial intelligence can raise an alert and take action, whether by sending a notification to a technician or even by restoring a safe state after a ransomware attack. This provides proactive protection against threats instead of waiting for signatures to be updated.
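The difference between signature-based and behaviour-based detection can be shown in a few lines. This sketch assumes a hypothetical hash database and uses a hand-written rule as a stand-in for a learned model: the signature check only recognises files whose exact SHA-256 hash is already known, while the behaviour check flags any process that modifies more than 50 files within 10 seconds, a pattern typical of ransomware encrypting a disk. The thresholds are illustrative, not tuned recommendations.

```python
import hashlib

# Hypothetical signature database: SHA-256 hashes of known malicious files.
KNOWN_BAD_HASHES = {hashlib.sha256(b"old known malware sample").hexdigest()}


def signature_match(file_bytes: bytes) -> bool:
    """Signature-based check: only catches files whose exact hash is already known."""
    return hashlib.sha256(file_bytes).hexdigest() in KNOWN_BAD_HASHES


def ransomware_like(events, window_seconds=10, max_writes=50):
    """Behaviour-based check: flag a process that modifies many files in a short window.

    events: list of (timestamp_seconds, action) tuples for one process, sorted by time.
    """
    writes = [t for t, action in events if action == "file_modified"]
    start = 0
    for end in range(len(writes)):
        # Move the window's left edge until it spans at most window_seconds.
        while writes[end] - writes[start] > window_seconds:
            start += 1
        if end - start + 1 > max_writes:
            return True
    return False


# A brand-new sample: its hash is not in the database, so the signature check misses it...
new_sample = b"new, never-seen-before malware"
print("signature match:", signature_match(new_sample))

# ...but its behaviour (200 files modified within about 4 seconds) gives it away.
events = [(i * 0.02, "file_modified") for i in range(200)]
print("behaviour alert:", ransomware_like(events))
```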

AI by Geralt on Pixabay

Battling bots

AI-based cybersecurity systems can provide the latest insights into global and industry-specific threats. Automated threats cannot be countered with purely manual responses. AI and machine learning help you gain a thorough understanding of website traffic and distinguish between good bots (such as search engine crawlers), bad bots, and humans.
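As an illustration of these three categories, the toy classifier below sorts web sessions into good bots, bad bots, and humans. A real system would learn such decision boundaries from large volumes of traffic; here the features (`requests_per_minute`, `crawler_verified`, and so on) and the thresholds are hypothetical, hand-picked rules. The `crawler_verified` flag stands for a check assumed to be done elsewhere, such as confirming a self-declared search engine crawler via reverse DNS.

```python
from dataclasses import dataclass


@dataclass
class Session:
    # All fields are hypothetical features a bot-management system might extract.
    user_agent: str
    requests_per_minute: float
    crawler_verified: bool  # result of a crawler verification done elsewhere
    ran_javascript: bool
    pointer_events: int     # mouse/touch events observed on the page


def classify(session: Session) -> str:
    """Toy rule-based classifier returning 'good bot', 'bad bot' or 'human'."""
    claims_crawler = "bot" in session.user_agent.lower()
    if claims_crawler and session.crawler_verified:
        return "good bot"
    if claims_crawler:
        # Claims to be a crawler but fails verification: treat as an impostor.
        return "bad bot"
    if session.requests_per_minute > 120 or (not session.ran_javascript and session.pointer_events == 0):
        return "bad bot"
    return "human"


sessions = [
    Session("Mozilla/5.0 (compatible; Googlebot/2.1)", 30, True, False, 0),
    Session("Mozilla/5.0 (compatible; Googlebot/2.1)", 300, False, False, 0),
    Session("python-requests/2.31", 600, False, False, 0),
    Session("Mozilla/5.0 (Windows NT 10.0)", 8, False, True, 42),
]
for s in sessions:
    print(f"{s.user_agent[:40]:40} -> {classify(s)}")
```

The key signal in this sketch is a mismatch: a session that claims to be a crawler but cannot be verified is treated as a bad bot.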

8.3. Disadvantages of AI in cybersecurity

Besides the many benefits listed above, the potential disadvantages of using AI should not be overlooked.

Organisations need considerable resources and financial investment to build and maintain an artificial intelligence system. In addition, “training” and preparing AI systems to protect against cyber-attacks requires adequate expertise, not to mention the time and money needed to achieve results.

For AI systems to work properly, they need a large amount of data to learn from. All of this requires investments that most organisations cannot afford. Without vast amounts of data, AI systems can produce incorrect or false-positive results, and training them on inaccurate data from an unreliable source can even backfire.

Another major drawback is that cybercriminals can use artificial intelligence to analyse malicious programs and launch more advanced attacks.

9. Robots, morality, ethics

“We must address, individually and collectively, moral and ethical issues raised by cutting-edge research in artificial intelligence and biotechnology, which will enable significant life extension, designer babies, and memory extraction.” - Klaus Schwab

With the development of artificial intelligence, virtual and robotic assistants will perform more complex tasks. For these “intelligent” machines to be considered safe, reliable, interoperable, and perhaps even ethical, robots must be able to quickly assess the situation and apply human societal standards.

This is much more complicated than teaching AI systems the rules for simpler tasks, such as tagging images or detecting spam, and having them make the appropriate decisions.

The behaviour of robots, even very sophisticated, complex, intelligent, and autonomous ones, is still determined by their creators, who write their control algorithms. And while experts say we are still a long way from making robots ethical agents (human-like beings capable of forming opinions and expressing emotions on their own), robots may soon take on a few human-like qualities, just as humanoid robots that display and express emotions already exist.

We should not assume that these issues are not yet relevant and that we will only have to deal with them in the distant future: ethical regulation in this area is already needed.

In a traffic situation where an accident is unavoidable, an autonomous car must decide whether to save itself (and thus protect the lives of its passengers) or the pedestrian who suddenly steps in front of it. Who is responsible for the decision if someone is injured?

And that is still a relatively manageable ethical problem, at least from a programming standpoint. The more interesting question is whether robots and artificial intelligence can become truly human-like ethical agents over time. Can they act according to principles, will they have virtues, and will they be able to give a moral explanation for their actions?

And once AI reaches that level sometime in the future, how will it see itself? What will its self-esteem and character be like? Will there be anxious, stubborn, sensitive, passionate, lazy, bad-tempered, or ambitious robots?

Artificial intelligence by Comfreak in Pixabay

And if so, how should we humans view these robots? Can we reach the point where robots are valued not only as tools but also as moral beings? And if so, do they not deserve the same respect and protection as humans?

In 2018, Jeff Bezos caused quite a stir when he posted on Twitter about taking a walk with SpotMini, Boston Dynamics’ robot dog, in the garden of the Parker Hotel in Palm Springs, California, which hosted the MARS conference.

Amazon founder Jeff Bezos takes a walk with SpotMini

We can, of course, ask the poetic question: can there ever be as intimate a relationship between a robot dog and a human as between a human and a living dog? Will SpotMini ever consider Jeff Bezos its ‘master’, or will it remain an ‘algorithm-based’ robot dog?

Will a robot dog ever be able to sense its owner’s mood, whether the owner is sad, happy, desperate, or angry? Will it curl up next to its master of its own accord when the master is ill, or only when the master orders it to through a tablet? We can also approach this from the other side: how will the robot dog express its ‘feelings’, for example that it is excited and wants to go for a walk? And will it even need to be walked in the open air?

Boston Dynamics has since developed SpotMini further and has also created Atlas, one of the most advanced humanoid robots.

It is difficult to predict the future. In any case, few would have thought in the ’90s that someone would be walking a robot dog in 2018. Perhaps anything is possible: as technology and human thinking evolve, things unimaginable to us today may become reality.

However, the question remains whether this direction is good or very dangerous.

10. AI in Luxembourg

“Luxembourg’s vision is one in which AI weaves smoothly into the fabric of society – improving the lives of all citizens and strengthening our activities as a nation and member of the global community.” – Xavier Bettel, Prime Minister, Minister for Digitalization

Developing a strategic vision for artificial intelligence in Luxembourg has become a national priority. The vision did not remain just an idea: action soon followed, and it took shape in the study entitled Artificial Intelligence: A Strategic Vision for Luxembourg.

This strategic vision rests on 3 pillars:

- Ambition 1: Become one of the most advanced digital societies in the world, especially in the EU

- Ambition 2: Become a data-driven and sustainable economy

- Ambition 3: Support people-centered AI development

Luxembourg is firmly committed to achieving these goals and has the funding, the ecosystem and the infrastructure to reach them in the short and long term.

Perhaps one of the most important findings of the study is that, whatever goals we pursue, the individual should be at the heart of all AI services supported by the Luxembourg government.

To achieve these objectives and the above ambitions, the strategy sets out several policy recommendations in the following areas (as per AI Watch: Luxembourg AI Strategy Report):

- Enhancing the skills and competencies in the field of AI and providing opportunities for lifelong learning;

- Supporting research and development of AI, transforming Luxembourg into a living lab for applied AI;

- Increasing public and private investments in AI and related technologies;

- Fostering the adoption and use of AI in the public sector;

- Strengthening opportunities for national and international networks and collaborations with strategic partners in AI;

- Developing an ethical and regulatory framework, with particular attention to privacy regulation and security, to ensure transparent and trustworthy AI development;

- Unleashing the potential of the data economy as a cornerstone of AI development.

In order to facilitate the development of a common strategy and vision for AI, 20,000 randomly selected Luxembourg residents received a letter at the end of 2021 from the Prime Minister inviting them to participate in a public consultation on artificial intelligence.

This 20-page questionnaire gathered a variety of opinions that provide guidance for the nation’s future AI policy. The comprehensive and representative survey, developed by the Luxembourg Institute of Socio-Economic Research (LISER), achieved a response rate of 12%, collecting feedback from all age groups aged 16 and over.

The main results of the consultation, in a nutshell, are as follows:

- Over 80% of the public thinks that the state needs a data ethics committee.

- When asked which sector they trusted most with data and AI, the public sector won, with 77% of respondents voicing a high or very high level of trust.

- 70% of respondents believe that AI can help them with their daily lives.

- 73% of respondents use digital tools for public sector tasks (online payments, MyGuichet.lu), the most common use; mobility (Google Maps, Waze) came second.

- More than 70% of respondents favour full-scale, AI-based trials, such as Luxembourg’s digital twin project.

The full report can be read here in French.

11. General AI statistics

“I am telling you, the world’s first trillionaires are going to come from somebody who masters AI and all its derivatives and applies it in ways we never thought of.” - Mark Cuban

According to the study Artificial Intelligence: A Strategic Vision for Luxembourg, AI could contribute up to € 13.33 trillion to the global economy in 2030. To put this amount in perspective, it is more than the current output of China and India combined.

By 2030, China is predicted to become the world leader in artificial intelligence technology, with a global market share of 26.1%. The Asia-Pacific region is also expected to have the highest compound annual growth rate through 2025.

The use of AI systems will also benefit economic development: global GDP is projected to be up to 14 per cent higher in 2030 thanks to artificial intelligence, equivalent to an additional $ 15.7 trillion.

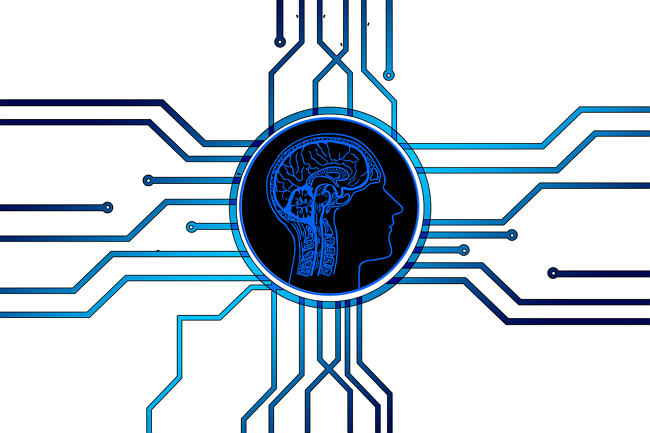

Global corporate investment in AI is also rising steadily: it reached nearly $ 68 billion in 2020, a 40 per cent increase on the previous year.

The Stanford Institute for Human-Centered Artificial Intelligence has published a report entitled Measuring trends in Artificial Intelligence, which details trends in artificial intelligence in 2021 across seven chapters.

Global corporate investments in AI (Source)

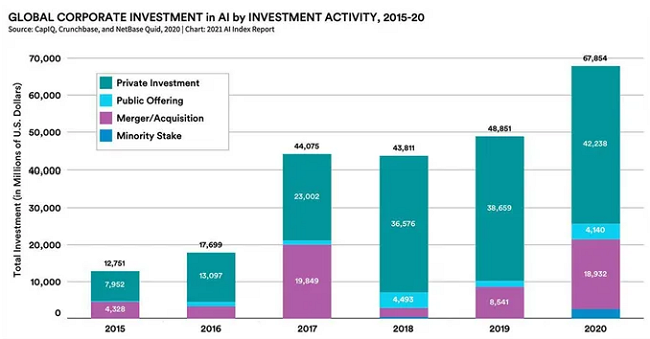

Companies are continuously increasing their use of AI tools in telecommunications, financial services, and the automotive industry. However, most companies seem unaware of the risks associated with this new technology. When asked in McKinsey’s survey on the State of AI in 2020 which risks they consider relevant, only cybersecurity was cited by more than half of respondents.

Risks from adopting AI that organisations consider relevant in 2020 (Source)

Nevertheless, due to the low and fragmented level of investment in artificial intelligence, the EU is behind the United States and cannot be considered a global leader in artificial intelligence. However, European countries are determined to change this situation and invest more and more in AI.

The three most important challenges companies face when implementing AI are staff skills (56%), fear of the unknown (42%), and finding a starting point (26%).

12. The eight biggest AI trends in 2022

“Artificial intelligence would be the ultimate version of Google. The ultimate search engine that would understand everything on the web. It would understand exactly what you wanted, and it would give you the right thing. We’re nowhere near doing that now. However, we can get incrementally closer to that, and that is basically what we work on.” - Larry Page

In the video below, Bernard Marr summarises the eight biggest AI trends for 2022 as follows:

1. AI will augment our workforce: more tasks will be handed over to AI. By offloading a large amount of repetitive, dull, or strenuous work, people will have more time for activities where they may still be better than AI: work that requires creativity or emotional intelligence.

2. Better language modelling capability: machines will be able to understand us and communicate with us better. OpenAI is further developing GPT-3, and its successor, GPT-4, is expected to be released soon.

3. Cyber security: The World Economic Forum’s Global Risks Report identified cybercrime as a more significant risk to society than terrorism. AI can be used with great success against cyber attacks, as it is very good at analysing network traffic, quickly learning and recognising patterns that could be malicious attacks.

4. AI and the metaverse: the metaverse is a digital environment where users can work and play together, a network of 3D virtual worlds focused on social connections. AI may play a key role in its further development in the near future.

5. Low-code and no-code AI: AI will help the general public create good-looking websites and applications without needing programming skills. This will democratise artificial intelligence technology in 2022 and further push its wide adoption.

6. Data-centric AI: instead of relying on vast amounts of data, AI will focus on higher-quality, labelled data that can be used in machine learning to develop better technologies.

7. Autonomous vehicles: It is predicted that autonomous cars will become increasingly effective and reach their full self-driving capability this year.

8. Creative AI: we will increasingly use AI to realise creative ideas and products, such as writing newspaper and article headlines, designing logos, or producing infographics.

Bernard Marr – YouTube video

13. Conclusions

“I’m increasingly inclined to think that there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don’t do something very foolish. I mean, with artificial intelligence, we’re summoning the demon.” - Elon Musk warned at MIT’s AeroAstro Centennial Symposium

Our digital world creates an unprecedented amount of data every day. Data alone is of little significance. However, if we can understand the relationships within the data and put them to work for us, data becomes invaluable.

By putting data at our service and creating intelligent technologies, we can build a data-driven society in which artificial intelligence is the channel that turns immeasurable amounts of data into immediate, meaningful, and well-founded actionable responses.

Among the many benefits of artificial intelligence are the automation of cumbersome or time-consuming processes and personalised services taken to a new level, which will radically change our lives and our world in the future.

The future has arrived, and the question is not whether AI can be avoided, but how we put it to work for us and how we can come out as winners with AI solutions.

Artificial intelligence has many advantages in many areas, one of which is cybersecurity. With rapidly evolving cyberattacks and the rapid proliferation of devices that exist today, artificial intelligence and machine learning can help keep up with cybercriminals, automate threat detection, and respond more effectively than traditional software-driven or manual techniques.

AI is an increasingly essential technology for improving the performance of IT security teams. Alongside, and in place of, tiring and constant manual system management, the use of AI technologies is becoming increasingly inevitable. AI performs the analysis and threat identification that security professionals can use to minimise the risk of attacks and improve security. AI can also help identify and prioritise risks, respond directly to incidents, and identify malicious attacks before they occur.

In addition to the many benefits, there are, of course, dangers in using artificial intelligence. However, the question is not whether to use AI at all, as tremendous advances have already been made in its development, but instead how we can exploit its potential under well-controlled conditions in a way that makes the most of it.

As has already been done for the protection of personal data, a broad and generally accepted legal framework for AI should be established and applied in order to control the dangers of artificial intelligence and address the ethical and moral issues that may arise.

If we do, we can hope that the friendship between humans and artificial intelligence will be lasting and fruitful:

Photo by Andy Kelly on Unsplash